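The LINK Lab homepage is operated as "a research record of LINK Lab researchers" and for "sharing research and educational materials."

We aim to make this homepage useful to anyone interested in deep reinforcement learning and 5G/6G intelligent networking.

Students interested in deep reinforcement learning and 5G/6G intelligent networking, as well as the Internet of Things, operations research, and financial engineering: LINK Lab graduate student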

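A branch of machine learning in which a computer learns on its own how to act or control.

Development of multi-step temporal-difference control algorithms to speed up learning

Development of control algorithms for various games

Multi-agent reinforcement learning research using the StarCraft II environment

Development of multi-agent cooperation/competition algorithms

Learning win/loss prediction models for asymmetric-information games based on generative models

Advancement of control techniques for developing agents that respond to changing game situations

Generative-model-based reinforcement learning using GANs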
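
As a rough illustration of the multi-step temporal-difference idea listed above, the sketch below computes a generic n-step return and applies one update toward it. It is a textbook-style example, not the lab's algorithm; the function names `n_step_return` and `td_update` and the default values of `alpha` and `gamma` are assumptions made for this example.

```python
from typing import Sequence


def n_step_return(rewards: Sequence[float], bootstrap_value: float, gamma: float) -> float:
    """Return r_0 + gamma*r_1 + ... + gamma^(n-1)*r_(n-1) + gamma^n * bootstrap_value."""
    g = bootstrap_value
    # Fold the rewards in from the last step back to the first.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def td_update(value: float, rewards: Sequence[float], bootstrap_value: float,
              alpha: float = 0.1, gamma: float = 0.99) -> float:
    """Move the current estimate a fraction alpha toward the n-step target."""
    target = n_step_return(rewards, bootstrap_value, gamma)
    return value + alpha * (target - value)


if __name__ == "__main__":
    # Two observed rewards, then bootstrap from an estimated value of 10.0.
    print(td_update(0.0, [1.0, 1.0], 10.0, alpha=0.5, gamma=0.5))
```

Using several real rewards before bootstrapping lets reward information reach earlier states in fewer updates than a one-step target, which is one common reason multi-step methods can learn faster.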

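Research on an intelligent network management framework based on network monitoring, data analysis, self-diagnosis, and policy formulation, and optimization of resource slicing in B5G/6G networks.

Research on solving practical problems: using mathematical modeling, statistical analysis, and optimization techniques to find optimal or near-optimal solutions to complex decision-making problems, maximizing profit, performance, or revenue, or minimizing loss, risk, or cost.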

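Collection and management of dynamic network topology and network traffic information

Prediction of network performance and cost with deep learning inference models

Autonomous management of network routing, mobility, and security with deep learning policy models

Low-cost, high-efficiency automated network management based on reinforcement learning

Optimization of network resource slicing based on multi-agent reinforcement learning that satisfies diverse requirements simultaneously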

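Solving traditional optimization problems such as TSP and Knapsack with reinforcement learning

Solving complex optimization problems with multi-agent reinforcement learning

Applying the developed reinforcement-learning-based optimization algorithms to 5G/6G networks and smart-factory logistics sorting systems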

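A field that studies frameworks, communication/network protocols, and deep learning and reinforcement learning algorithms for making IoT devices intelligent

Precise nonlinear control of IoT devices

Federated reinforcement learning across multiple IoT devices

Transfer learning of reinforcement learning models between multiple IoT devices

Model synchronization between digital twins and physical equipment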
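
To make the federated reinforcement learning item above more concrete, here is a minimal sketch of weighted parameter averaging, a common aggregation step in federated learning. It is an assumption that averaging of this kind is relevant here; the source does not say which aggregation rule the lab uses, and the name `federated_average` and the dictionary-of-lists parameter layout are hypothetical.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def federated_average(device_params: List[Params], weights: List[float]) -> Params:
    """Weighted average of per-device parameters, computed name by name.

    weights could be, for example, the number of samples each device collected.
    """
    total = sum(weights)
    averaged: Params = {}
    for name, reference in device_params[0].items():
        averaged[name] = [
            sum(w * p[name][i] for p, w in zip(device_params, weights)) / total
            for i in range(len(reference))
        ]
    return averaged


if __name__ == "__main__":
    device_a = {"w": [1.0, 2.0]}
    device_b = {"w": [3.0, 4.0]}
    # Device B contributed three times as much experience as device A.
    print(federated_average([device_a, device_b], [1.0, 3.0]))
```

In a setup like this, each device trains locally and shares only model parameters, so raw experience data does not need to leave the device.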

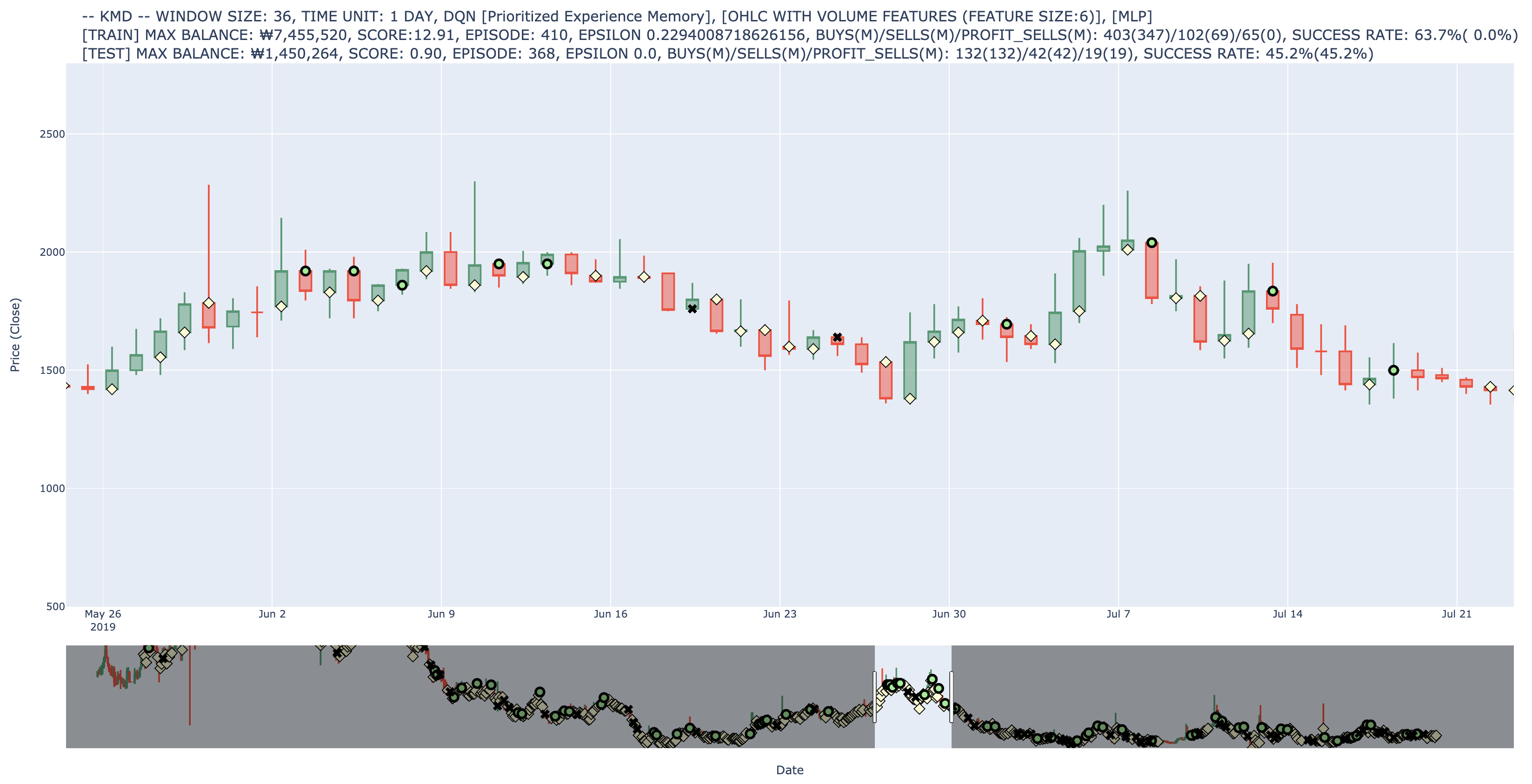

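An engineering field that applies mathematics, statistics, and programming to analyze the risk of financial products and to improve on existing methods for diversifying that risk

Collection, storage, and preprocessing of stock price data

Development of deep learning models for stock price prediction

Development of reinforcement-learning-based automated trading algorithms and systems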