Temporal Difference Learning - SARSA & Q-Learning

LINK@KoreaTech

Febraury, 14, 2019

Email: link.koreatech at gmail.com

This site is made by using the source codes shared from the site, REINFORCEjs

Febraury, 14, 2019

Email: link.koreatech at gmail.com

This site is made by using the source codes shared from the site, REINFORCEjs

SARSA

- Sum of all state values: -1

- Total number of steps over all episodes: -1

- Status: RESET

- Epsilon: 0.2

- Discount Factor (γ): 0.75

- Initial epsilon value for ε-greedy policy (ε): 0.2

- Epsilon decay rate (η): 0.02

- Learning rate (α): 0.1

Sum of each step's reward over episodes:

Episode: 0

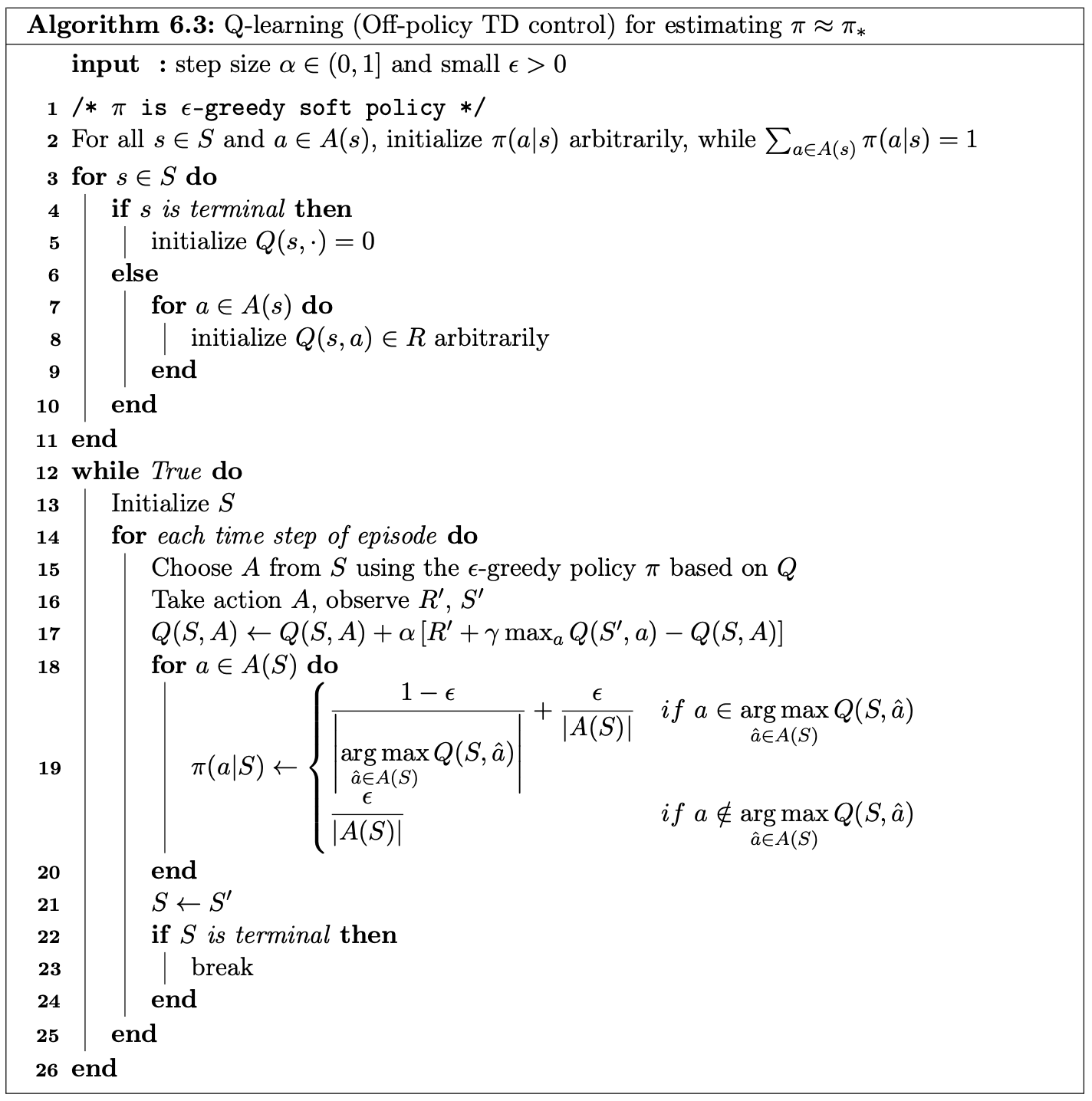

Q-learning

- Sum of all state values: -1

- Total number of steps over all episodes: -1

- Status: RESET

- Epsilon: 0.2

- Discount Factor (γ): 0.75

- Initial epsilon value for ε-greedy policy (ε): 0.2

- Epsilon decay rate (η): 0.02

- Learning rate (α): 0.1

Sum of each step's reward over episodes:

Episode: 0